Understanding Cluster Analysis

Cluster analysis, also known as clustering, is a fundamental process in market research and data analysis. It involves grouping data points or entities together based on hidden patterns, underlying similarities, and salient features of the data. By identifying similar groups within a dataset, cluster analysis helps in discovering meaningful structures and descriptive attributes that can provide valuable insights for market segmentation, customer profiling, and product development.

Basics of Cluster Analysis

The goal of cluster analysis is to divide a set of examples into distinct clusters, where entities within each cluster are more similar to each other than to entities in other clusters. The process involves mathematically determining the similarity or dissimilarity between data points and grouping them accordingly. The entities within a cluster share common characteristics, allowing for a deeper understanding of their relationships and behavior.

Importance of Cluster Analysis

Cluster analysis plays a vital role in market research and other fields because it helps in organizing large datasets into meaningful groups, making it easier to interpret and analyze the data. Some of the key benefits and applications of cluster analysis include:

-

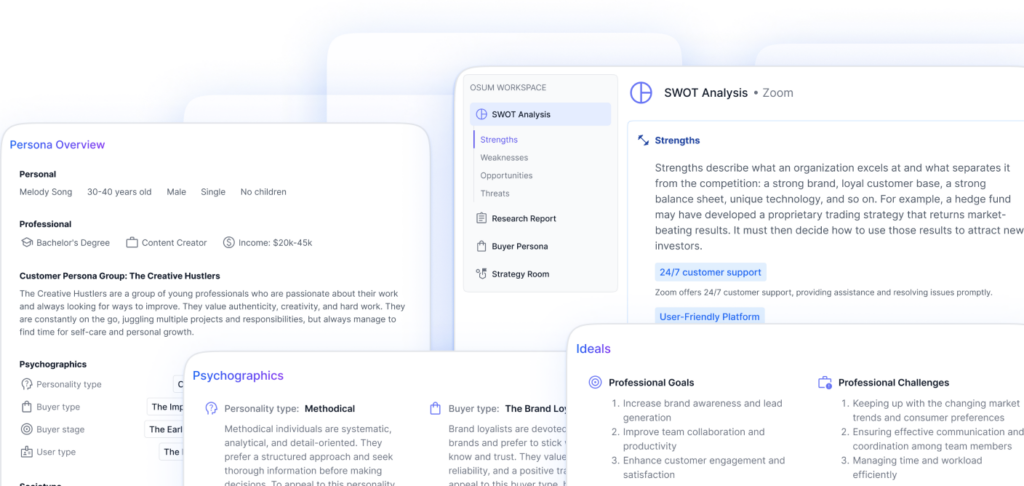

Market Segmentation: Cluster analysis enables the identification of distinct customer segments based on their preferences, behaviors, and demographics. By understanding these segments, businesses can tailor their marketing strategies to effectively target and engage specific groups of customers.

-

Customer Profiling: Cluster analysis helps in creating detailed profiles of different customer groups. By analyzing the characteristics and behaviors of each cluster, businesses can gain insights into their customers’ needs, preferences, and buying patterns. This information can be invaluable for developing targeted marketing campaigns and personalized experiences.

-

Product Development: Cluster analysis aids in developing new products or optimizing existing ones by identifying groups of customers with similar needs and preferences. By understanding the unique requirements of each cluster, businesses can design products that cater to specific segments, enhancing customer satisfaction and loyalty.

Cluster analysis offers a versatile and powerful approach for understanding complex datasets and extracting meaningful information. By uncovering hidden patterns and relationships, businesses can make data-driven decisions and gain a competitive edge in the market.

In the next sections, we will explore different types of clustering techniques, key cluster analysis methods, factors influencing cluster analysis, and methods for determining optimal clusters.

Types of Clustering Techniques

When it comes to cluster analysis, there are various techniques available for grouping data into meaningful clusters. Each technique has its own characteristics and advantages. In this section, we will explore hierarchical vs. partition clustering, density-based vs. distribution-based clustering, and fuzzy clustering.

Hierarchical vs. Partition Clustering

Hierarchical clustering builds a hierarchy of clusters without requiring prior knowledge of the number of clusters. In its common agglomerative form, it starts by treating each data point as an individual cluster and then progressively merges the most similar clusters until the desired number of clusters is reached or only one cluster remains. Hierarchical clustering is relatively easy to understand and implement. However, it may not always provide the optimal solution due to arbitrary decisions, difficulties with missing data, poor performance with mixed data types, challenges with large datasets, and potential misinterpretation of dendrograms (Displayr). An alternative approach such as latent class analysis can be considered for more robust results.
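The merge procedure described above can be sketched in a few lines of Python. This is a minimal illustration rather than a production implementation: it assumes single linkage (the distance between two clusters is the distance between their closest members), which the text does not specify, and the function name `agglomerative` and the sample points are made up for the example.

```python
from math import dist


def agglomerative(points, n_clusters):
    """Merge the two closest clusters repeatedly until n_clusters remain.

    Assumption: single linkage (closest pair of members) defines the
    distance between two clusters.
    """
    clusters = [[p] for p in points]  # start: every point is its own cluster
    while len(clusters) > n_clusters:
        best = None  # (distance, index_i, index_j) of the closest pair so far
        for i in range(len(clusters)):
            for j in range(i + 1, len(clusters)):
                d = min(dist(a, b) for a in clusters[i] for b in clusters[j])
                if best is None or d < best[0]:
                    best = (d, i, j)
        _, i, j = best
        clusters[i] = clusters[i] + clusters[j]  # merge cluster j into cluster i
        del clusters[j]
    return clusters


# Hypothetical 2-D data: two tight pairs and one isolated point.
points = [(1.0, 1.0), (1.2, 0.9), (5.0, 5.0), (5.1, 4.8), (9.0, 1.0)]
groups = agglomerative(points, 3)
print(groups)
```

The nested loops compare every pair of clusters on every merge, which is one reason the approach becomes costly on large datasets.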

Partition clustering, on the other hand, divides the data into a fixed number of clusters, denoted as ‘K’, that is chosen in advance. One of the most widely used partition clustering algorithms is K-means clustering. K-means requires the user to specify the number of clusters and then alternates between assigning each data point to its nearest centroid and recomputing each centroid as the mean of its assigned points, until the assignments stop changing. It is efficient and effective for organizing data into non-hierarchical clusters. However, K-means is sensitive to initial conditions and outliers, and the choice of ‘K’ can significantly impact the results.

Density-Based vs. Distribution-Based Clustering

Density-based clustering methods focus on connecting areas of high example density and forming clusters based on the density connectivity. These methods are particularly useful for capturing arbitrary-shaped clusters. One popular density-based clustering algorithm is DBSCAN (Density-Based Spatial Clustering of Applications with Noise). However, density-based methods can struggle with varying densities within the dataset and high-dimensional data (Google Developers).
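To make the idea of density connectivity concrete, here is a compact sketch of the DBSCAN procedure in plain Python. The parameter names `eps` (neighbourhood radius) and `min_pts` (minimum neighbours for a dense "core" point) follow common usage but are assumptions here, as are the sample points; the brute-force neighbour search is chosen for readability, not speed.

```python
from math import dist

NOISE = -1


def dbscan(points, eps, min_pts):
    """Return one label per point: 0, 1, ... for clusters, -1 for noise."""
    labels = [None] * len(points)  # None means "not visited yet"

    def neighbours(i):
        # Brute-force radius search; the point itself counts as a neighbour.
        return [j for j in range(len(points)) if dist(points[i], points[j]) <= eps]

    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbours(i)
        if len(seeds) < min_pts:
            labels[i] = NOISE  # may still be claimed later as a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster  # border point: joins but does not expand
            if labels[j] is not None:
                continue
            labels[j] = cluster
            reachable = neighbours(j)
            if len(reachable) >= min_pts:  # j is a core point, keep expanding
                queue.extend(reachable)
    return labels


# Hypothetical data: two dense groups and one far-away outlier.
points = [(0, 0), (0, 1), (1, 0), (1, 1), (10, 10), (10, 11), (11, 10), (50, 50)]
labels = dbscan(points, eps=1.5, min_pts=3)
print(labels)
```

Because a single `eps` is applied everywhere, a dataset that mixes very dense and very sparse groups is hard to cover with one setting, which matches the limitation noted above.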

Distribution-based clustering, as the name suggests, assumes that the data is composed of distributions, often Gaussian distributions. In this approach, points are assigned to clusters based on the probability of belonging to a cluster, which decreases as the distance from the cluster center increases. The Gaussian Mixture Model (GMM) is one of the most popular techniques used in distribution-based clustering.

Fuzzy Clustering Explained

Fuzzy clustering is a technique where data points can belong to multiple clusters with varying degrees of membership. Unlike traditional clustering, which assigns each point to a single cluster, fuzzy clustering allows for a more flexible representation of data. It is particularly useful when there is ambiguity or overlap between clusters. Fuzzy clustering algorithms, such as Fuzzy C-means, assign membership values to each point, indicating the degree to which the point belongs to each cluster. This approach enables a more nuanced analysis of complex datasets.
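As an illustration of membership values, the sketch below computes the standard Fuzzy C-means membership of one point with respect to a set of fixed cluster centers. It covers only the membership step, not the full algorithm (which also updates the centers). The function name, the fuzzifier default `m=2.0`, and the sample centers are assumptions for the example.

```python
from math import dist


def fuzzy_memberships(point, centers, m=2.0):
    """Degree of membership of `point` in each cluster; values sum to 1.

    Uses the usual Fuzzy C-means rule
        u_i = 1 / sum_k (d_i / d_k) ** (2 / (m - 1))
    where d_i is the distance from the point to center i and m > 1.
    """
    distances = [dist(point, c) for c in centers]
    if 0.0 in distances:
        # A point sitting exactly on a center belongs fully to that center.
        hits = [1.0 if d == 0.0 else 0.0 for d in distances]
        return [h / sum(hits) for h in hits]
    exponent = 2.0 / (m - 1.0)
    return [
        1.0 / sum((d_i / d_k) ** exponent for d_k in distances)
        for d_i in distances
    ]


centers = [(0.0, 0.0), (4.0, 0.0)]  # hypothetical cluster centers
near_first = fuzzy_memberships((1.0, 0.0), centers)
in_between = fuzzy_memberships((2.0, 0.0), centers)
print(near_first, in_between)
```

A point close to one center gets a membership near 1 for that cluster, while a point halfway between two centers is split evenly, which is the overlap that hard clustering cannot express.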

By understanding the different types of clustering techniques, marketers can apply the most appropriate method to their specific needs. Whether it’s hierarchical or partition clustering, density-based or distribution-based clustering, or even fuzzy clustering, each technique offers unique advantages for tasks such as market segmentation, customer profiling, and product development. The choice of clustering technique should align with the objectives of the analysis and the characteristics of the dataset.

Key Cluster Analysis Methods

When it comes to cluster analysis, several methods can be employed to uncover patterns and groupings within datasets. In this section, we will explore three key cluster analysis methods: K-Means clustering, Mean Shift clustering, and Gaussian Mixture Model (GMM).

K-Means Clustering

K-Means clustering is a widely used method of cluster analysis that requires advance knowledge of the number of clusters, denoted by ‘K’. This method aims to partition data points into K clusters, with each cluster represented by a centroid point. The algorithm iteratively assigns data points to the nearest centroid, updating the centroids until convergence is achieved.

One advantage of K-Means clustering is its efficiency in organizing data into non-hierarchical clusters. However, it can be sensitive to initial conditions and outliers. This technique is commonly used in various fields, including market research, customer segmentation, and image analysis.
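The assign-and-update loop can be written out directly. The following is a minimal sketch of the standard K-means iteration (often called Lloyd's algorithm). It assumes the caller supplies the initial centroids, so the sensitivity to initial conditions mentioned above is visible in the interface; the function name and sample data are illustrative only.

```python
from math import dist


def kmeans(points, centroids, max_iter=100):
    """Return (labels, centroids) after iterating until centroids stop moving."""
    centroids = [tuple(c) for c in centroids]
    for _ in range(max_iter):
        # Assignment step: each point goes to its nearest centroid.
        labels = [
            min(range(len(centroids)), key=lambda k: dist(p, centroids[k]))
            for p in points
        ]
        # Update step: each centroid becomes the mean of its assigned points.
        new_centroids = []
        for k in range(len(centroids)):
            members = [p for p, label in zip(points, labels) if label == k]
            if members:
                new_centroids.append(
                    tuple(sum(coord) / len(members) for coord in zip(*members))
                )
            else:
                new_centroids.append(centroids[k])  # empty cluster: leave in place
        if new_centroids == centroids:  # converged
            break
        centroids = new_centroids
    return labels, centroids


# Hypothetical 2-D data with two obvious groups, K = 2.
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 0.0), (8.0, 8.0), (9.0, 9.0), (8.0, 10.0)]
labels, centroids = kmeans(points, centroids=[(1.0, 1.0), (8.0, 8.0)])
print(labels, centroids)
```

Passing different starting centroids can lead to a different final partition, which is why practical implementations usually run several initializations and keep the best result.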

Mean Shift Clustering

Mean Shift clustering is a nonparametric technique that does not require any prior knowledge of the number of clusters. It works by estimating the underlying density of a dataset through kernel density estimation. The algorithm iteratively shifts each data point towards the nearest peak of that density, and points that arrive at the same peak are assigned to the same cluster (Analytixlabs).

Mean Shift clustering is known for its simplicity and flexibility, making it useful in various applications such as image segmentation. By adapting to the underlying density of the data, Mean Shift clustering can identify clusters of different shapes and sizes.
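A one-dimensional sketch shows the shifting step in action. It assumes a Gaussian kernel and a user-chosen `bandwidth`, neither of which is specified in the text, and it groups points whose final positions lie within half a bandwidth of each other, which is a simple heuristic chosen for this example.

```python
from math import exp


def mean_shift_1d(values, bandwidth, max_iter=100, tol=1e-7):
    """Shift every value uphill on a Gaussian kernel density estimate."""
    modes = []
    for x in values:
        for _ in range(max_iter):
            # Kernel weights of all data points as seen from the current position.
            weights = [exp(-0.5 * ((x - v) / bandwidth) ** 2) for v in values]
            shifted = sum(w * v for w, v in zip(weights, values)) / sum(weights)
            converged = abs(shifted - x) < tol
            x = shifted
            if converged:
                break
        modes.append(x)

    # Points whose modes (nearly) coincide belong to the same cluster.
    centers, labels = [], []
    for mode in modes:
        for k, center in enumerate(centers):
            if abs(mode - center) < bandwidth / 2:
                labels.append(k)
                break
        else:
            centers.append(mode)
            labels.append(len(centers) - 1)
    return labels, centers


values = [1.0, 1.2, 0.8, 5.0, 5.3, 4.7]  # hypothetical measurements
labels, centers = mean_shift_1d(values, bandwidth=0.5)
print(labels, centers)
```

The number of clusters is never passed in; it falls out of how many density peaks exist at the chosen bandwidth, so the bandwidth takes over the role that K plays in K-means.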

Gaussian Mixture Model (GMM)

Gaussian Mixture Model (GMM) is a distribution-based clustering technique that assumes the data is generated by a mixture of Gaussian distributions. Each cluster is described by a mean, a covariance, and a mixing probability, and GMM uses these parameters to assign every data point a probability of belonging to each cluster. The expectation-maximization (EM) algorithm is commonly used to estimate these parameters.

GMM is particularly useful when dealing with data that may not have distinct clusters or when the underlying distribution is not uniform. It allows for more flexible cluster shapes and can capture overlapping clusters. GMM finds applications in areas such as image and text classification, anomaly detection, and customer segmentation.
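The following sketch shows one way the expectation-maximization loop can look for one-dimensional data. It is a simplified illustration: each component is a tuple `(weight, mean, std)`, the starting parameters are supplied by hand, and there is no safeguard against a component collapsing onto a single point. All names and data values are assumptions for the example.

```python
from math import exp, pi, sqrt


def gaussian_pdf(x, mean, std):
    return exp(-0.5 * ((x - mean) / std) ** 2) / (std * sqrt(2 * pi))


def responsibilities(x, components):
    """E-step for one point: probability that each component produced x."""
    weighted = [w * gaussian_pdf(x, mu, sd) for w, mu, sd in components]
    total = sum(weighted)
    return [v / total for v in weighted]


def em_step(data, components):
    """One E-step followed by one M-step; returns updated (weight, mean, std)."""
    resp = [responsibilities(x, components) for x in data]
    updated = []
    for k in range(len(components)):
        n_k = sum(r[k] for r in resp)  # effective number of points in component k
        mean = sum(r[k] * x for r, x in zip(resp, data)) / n_k
        var = sum(r[k] * (x - mean) ** 2 for r, x in zip(resp, data)) / n_k
        updated.append((n_k / len(data), mean, sqrt(var)))
    return updated


data = [0.1, -0.3, 0.4, -0.2, 3.8, 4.1, 4.3, 3.9]  # hypothetical sample
start = [(0.5, 0.0, 1.0), (0.5, 4.0, 1.0)]          # hand-picked initial guess
components = start
for _ in range(10):
    components = em_step(data, components)

# A point halfway between two equal components is shared evenly between them.
midpoint_probs = responsibilities(2.0, start)
print(components, midpoint_probs)
```

Unlike K-means, every point keeps a probability for every cluster, so overlapping groups are represented by intermediate values rather than a forced hard assignment.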

By leveraging these key cluster analysis methods, analysts and researchers can gain valuable insights from their datasets. Each method offers its own advantages and is suited to different types of data and research objectives. Understanding these techniques enables marketers to effectively apply cluster analysis in various domains, including market segmentation, customer profiling, and product development.

Factors Influencing Cluster Analysis

When conducting cluster analysis, there are several factors that have a significant impact on the results. Understanding these factors is crucial for obtaining accurate and meaningful insights. In this section, we will explore three key factors that influence cluster analysis: distance and similarity measures, the impact of distance metrics, and the role of standardization.

Distance and Similarity Measures

Distance and similarity measures play a crucial role in cluster analysis. These measures quantify the similarity or dissimilarity between data samples, allowing for the grouping of similar samples into clusters. There are various types of similarity and dissimilarity measures used in data science, each suited for different types of data and analysis purposes.

One commonly used measure is the Euclidean distance, which measures the straight-line distance between two points in a space of any number of dimensions. It is often used for numeric attributes; it is symmetric and convex, and the set of points at a fixed distance from a center forms a sphere. Another measure is the Manhattan distance, also known as City Block or taxicab distance, which sums the absolute differences along each coordinate, much like counting the blocks that separate two places on a street grid. The Manhattan distance is frequently used in KNN classification, and the related L1 norm is used for regularization in neural networks. A third measure is the Cosine distance, widely used in text mining and natural language processing, which compares two documents by the angle between their word-frequency vectors rather than by the vectors’ magnitudes.
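The three measures named above are short enough to write out by hand. The functions below are plain-Python sketches with illustrative names; the cosine version assumes neither vector is all zeros.

```python
from math import sqrt


def euclidean(a, b):
    """Straight-line distance in any number of dimensions."""
    return sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def manhattan(a, b):
    """Sum of absolute coordinate differences (city-block distance)."""
    return sum(abs(x - y) for x, y in zip(a, b))


def cosine_distance(a, b):
    """1 minus the cosine of the angle between a and b (both non-zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = sqrt(sum(x * x for x in a))
    norm_b = sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


print(euclidean((0, 0), (3, 4)))        # 5.0
print(manhattan((0, 0), (3, 4)))        # 7
print(cosine_distance((1, 0), (0, 1)))  # 1.0
# Word-count vectors that differ only in length point in the same direction,
# so their cosine distance is (up to rounding) zero.
print(cosine_distance((1, 2, 0), (2, 4, 0)))
```

The last call illustrates why cosine distance suits text: a long document and a short one with the same word proportions are treated as nearly identical, whereas Euclidean distance would separate them.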

For a comprehensive understanding of the various similarity and dissimilarity measures used in cluster analysis, refer to our article on cluster analysis techniques.

Impact of Distance Metrics

The choice of distance metric has a significant impact on the performance and outcomes of cluster analysis. Different distance metrics emphasize different aspects of the data, leading to variations in the clustering results. The selection of an appropriate distance metric depends on the nature of the data and the specific objectives of the analysis.

Distance metrics need to satisfy certain conditions, such as non-negativity, symmetry, triangle inequality, and zero distance only between identical points. These conditions ensure the reliability and validity of the clustering process. It is important to choose a distance metric that aligns with the properties of the data and the underlying assumptions of the analysis.

To gain insights into the advantages and disadvantages of different distance metrics and their impact on cluster analysis, refer to our article on cluster analysis techniques.

Standardization in Cluster Analysis

Standardization is a crucial step in cluster analysis, especially when dealing with variables that have different scales or units of measurement. Standardization transforms the data to a common scale, ensuring that no single variable dominates the clustering process due to its larger magnitude.

By standardizing the data, each variable contributes equally to the clustering process, allowing for a fair comparison and evaluation of different variables. Standardization ensures that the clustering results are not biased by the variables’ original scales, leading to more accurate and meaningful insights.
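One common way to standardize is the z-score: subtract each variable's mean and divide by its standard deviation. The sketch below assumes that choice (other scalings such as min-max exist) and uses made-up age and income figures.

```python
from statistics import mean, pstdev


def standardize(rows):
    """Return rows with every column rescaled to mean 0 and std 1 (z-scores)."""
    columns = list(zip(*rows))
    means = [mean(col) for col in columns]
    stds = [pstdev(col) for col in columns]  # population standard deviation
    return [
        # A constant column (std of 0) carries no information; map it to 0.0.
        tuple((v - m) / s if s else 0.0 for v, m, s in zip(row, means, stds))
        for row in rows
    ]


# Hypothetical customers: (age in years, annual income in dollars).
customers = [(25, 30000), (35, 60000), (45, 90000)]
scaled = standardize(customers)
print(scaled)
```

Before scaling, any distance between these customers is driven almost entirely by income because its values are thousands of times larger; after scaling, age and income contribute on the same footing.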

To understand the role of standardization in cluster analysis and its practical implications, refer to our article on cluster analysis techniques.

By considering the appropriate distance and similarity measures, understanding the impact of distance metrics, and implementing standardization techniques, marketing managers can effectively utilize cluster analysis to gain valuable market insights. Cluster analysis enables market segmentation, customer profiling, and product development, among other practical applications. For more information on these applications, refer to our article on cluster analysis applications.

Practical Applications of Cluster Analysis

Cluster analysis techniques have numerous practical applications across various domains, particularly in market research and customer analysis. Some of the key applications include market segmentation, customer profiling, and product development.

Market Segmentation

Market segmentation is a crucial application of cluster analysis. It involves dividing a market into distinct groups of consumers who have similar needs, characteristics, or behaviors. By analyzing customer data and applying cluster analysis techniques, businesses can identify meaningful segments within their target market. This segmentation helps businesses tailor their marketing strategies and offerings to specific customer segments, improving customer satisfaction and profitability. For more examples and insights on market segmentation, visit our article on cluster analysis examples.

Customer Profiling

Customer profiling is another valuable application of cluster analysis. It involves grouping customers based on their demographics, preferences, behaviors, and purchase history. By applying cluster analysis techniques to customer data, businesses can gain a deeper understanding of their customers. This understanding allows businesses to personalize marketing campaigns, provide targeted recommendations, and enhance customer engagement and loyalty. To explore more about customer profiling and its benefits, refer to our article on cluster analysis applications.

Product Development

Cluster analysis plays a significant role in product development as well. By utilizing cluster analysis techniques, businesses can identify market segments with specific needs and preferences. Understanding the characteristics and requirements of different customer segments allows businesses to develop products that cater to those segments. This targeted approach to product development leads to higher customer satisfaction and market success. To learn more about how cluster analysis contributes to product development, check out our article on advantages of cluster analysis.

In conclusion, cluster analysis is an indispensable tool in market research, enabling businesses to identify target markets, understand consumer behavior, and develop effective marketing strategies. By leveraging the power of cluster analysis, businesses can identify profitable customer segments, personalize marketing efforts, and allocate resources efficiently.

Determining Optimal Clusters

When conducting cluster analysis for market research or other applications, it is crucial to determine the optimal number of clusters. This helps ensure that the analysis provides meaningful insights and actionable results. Two commonly used methods for determining the optimal number of clusters are the Elbow Method and the Silhouette Analysis Approach.

The Elbow Method

The Elbow Method is often employed in K-Means clustering to identify the optimal value of K, which represents the number of clusters. The method involves plotting the within-cluster sum of squared distances between each point and its assigned centroid (the loss function) against different K values and selecting the K value at the “elbow” of the curve. The elbow point is where the curve visibly bends and further decreases in the loss function become much smaller (LinkedIn).

By examining the Elbow Curve, you can identify the point where the addition of more clusters does not significantly improve the clustering quality. It is important to note that the elbow point may not always have a clear-cut appearance, making it necessary to consider additional evaluation methods.
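The numbers behind an elbow curve can be produced with a small script. This sketch uses one-dimensional data and a deliberately simple, deterministic K-means (initial centroids are spread across the sorted values, an assumption made so the example is reproducible); `wcss` is a hypothetical helper name for the within-cluster sum of squares.

```python
def kmeans_1d(values, k, n_iter=50):
    """Very small 1-D K-means with a deterministic starting position."""
    ordered = sorted(values)
    # Assumption: spread the initial centroids evenly across the sorted data.
    centroids = [ordered[(2 * i + 1) * len(ordered) // (2 * k)] for i in range(k)]
    for _ in range(n_iter):
        clusters = [[] for _ in range(k)]
        for v in values:
            nearest = min(range(k), key=lambda j: abs(v - centroids[j]))
            clusters[nearest].append(v)
        centroids = [
            sum(c) / len(c) if c else centroids[j] for j, c in enumerate(clusters)
        ]
    return centroids, clusters


def wcss(values, k):
    """Within-cluster sum of squared distances for a K-cluster solution."""
    centroids, clusters = kmeans_1d(values, k)
    return sum(
        (v - c) ** 2 for c, members in zip(centroids, clusters) for v in members
    )


# Hypothetical data with three natural groups.
values = [1.0, 1.1, 0.9, 5.0, 5.2, 4.8, 9.0, 9.1, 8.9]
curve = {k: wcss(values, k) for k in range(1, 5)}
for k, loss in curve.items():
    print(k, round(loss, 2))
```

On this data the loss falls steeply up to K = 3 and barely changes afterwards, which is the bend the method looks for. With real data the bend is usually less sharp, which is why a second criterion is often consulted.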

Silhouette Analysis Approach

The Silhouette Analysis Approach is another valuable technique for determining the optimal number of clusters. This method calculates the silhouette score for different numbers of clusters and selects the number of clusters that maximizes it. The silhouette score compares how close each sample is to the other samples in its own cluster with how close it is to the samples in the nearest neighboring cluster, providing an evaluation of the clustering quality (Analytics Vidhya).

To perform Silhouette Analysis, the average silhouette coefficient is computed for various values of K. The silhouette coefficient ranges from -1 to 1, with higher values indicating better clustering results. The value of K at which the average silhouette coefficient peaks is taken as the optimal number of clusters, as it marks the point at which the samples are most appropriately assigned to their respective clusters.
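For reference, the silhouette coefficient of a single sample is usually defined as (b - a) / max(a, b), where a is the mean distance to the other members of its own cluster and b is the mean distance to the members of the nearest other cluster. The sketch below averages that quantity over all samples. It assumes at least two clusters and scores a single-member cluster as 0, a common convention; the function name and data are illustrative.

```python
from math import dist


def silhouette_score(points, labels):
    """Average silhouette coefficient; requires at least two distinct labels."""
    clusters = {}
    for p, label in zip(points, labels):
        clusters.setdefault(label, []).append(p)

    scores = []
    for p, label in zip(points, labels):
        own = clusters[label]
        if len(own) == 1:
            scores.append(0.0)  # convention for single-member clusters
            continue
        # a: mean distance to the other members of the same cluster
        # (the point's distance to itself is 0, so divide by len - 1).
        a = sum(dist(p, q) for q in own) / (len(own) - 1)
        # b: smallest mean distance to the members of any other cluster.
        b = min(
            sum(dist(p, q) for q in other) / len(other)
            for key, other in clusters.items()
            if key != label
        )
        denom = max(a, b)
        scores.append((b - a) / denom if denom else 0.0)
    return sum(scores) / len(scores)


points = [(0.0,), (1.0,), (10.0,), (11.0,)]  # hypothetical 1-D samples
good = silhouette_score(points, [0, 0, 1, 1])  # natural grouping
bad = silhouette_score(points, [0, 1, 0, 1])   # deliberately poor grouping
print(round(good, 3), round(bad, 3))
```

The natural grouping scores close to 1 and the scrambled one scores below 0. Repeating the calculation for the labels produced at each candidate K and keeping the highest average gives the selection rule described above.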

Both the Elbow Method and Silhouette Analysis Approach play significant roles in determining the optimal number of clusters in cluster analysis. While the Elbow Method provides a visual representation of the curve, the Silhouette Analysis Approach quantifies the clustering quality based on the silhouette coefficient. By utilizing these methods, marketing managers and researchers can make informed decisions about the number of clusters to use in their analyses, leading to more accurate cluster analysis applications such as market segmentation, customer profiling, and product development.